Background

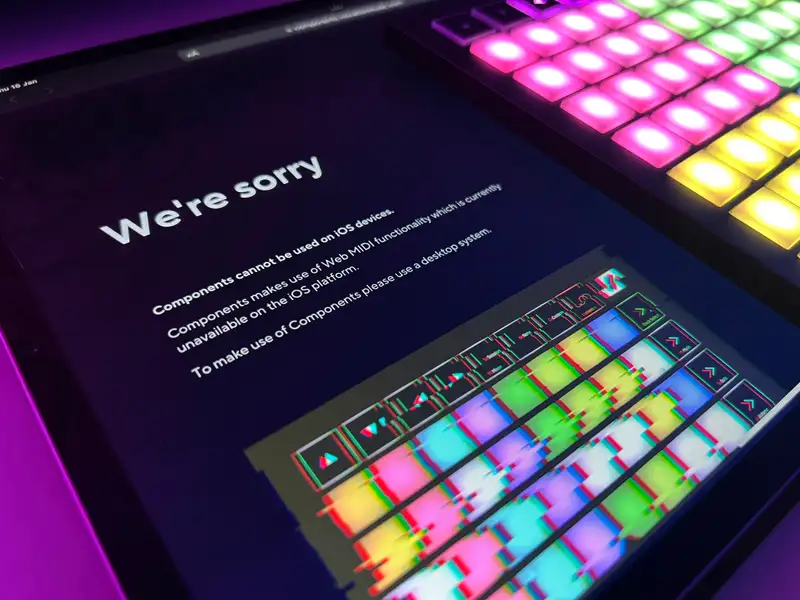

Components is the editor for Novation’s synths and MIDI controllers. It runs in the browser and uses the Web MIDI API to communicate with your devices. Being web-based is awesome because it runs everywhere without needing to install extra native device manager software and drivers. I recently got one of Novation’s Launchpad controllers and was excited to set up some custom modes. I connected it to my iPad Pro, opened its proudly “desktop class” browser, and was greeted with an error saying Components won’t work on iOS because Safari doesn’t support Web MIDI. Great, thanks Apple:

“Just download Chrome,” the ancient wisdom whispered in my ear. However, Apple doesn’t currently let browser apps ship their own engine; they’re all just skins on top of the same MIDI-less WebKit engine that powers Safari.

Sorry babe, fruitcorp said our light-up rectangles can’t be friends…

There’s an app on the App Store claiming to enable Web MIDI on iOS, but testing it reveals it just straight up doesn’t work and seems to do absolutely nothing 👍

But it did get me thinking: I know enough about MIDI, surely I could make my own app? I didn’t know anything about Swift or iOS development, but this seemed like the perfect project to learn with. And with iOS restrictions lifting in some regions, I decided to tackle the project now while it might still be of some use!

Initial Rough Implementation

My plan, having never done something like this before, was to work through the Web MIDI API documentation and implement every part of it myself in JavaScript, which I'd inject into a standard iOS web view before a page loads. My code would pretend to be the missing features and communicate with Swift code that implements the actual MIDI communication using CoreMIDI. All of this should be totally invisible to the page, so it should work with any web app that uses Web MIDI:

The surface area of the Web MIDI API is actually pretty small; all I have to replicate is:

- Functions: requestMIDIAccess()
- Events: MIDIMessageEvent, MIDIConnectionEvent
- Other classes: MIDIAccess, MIDIPort, MIDIInput, MIDIOutput, MIDIInputMap, MIDIOutputMap
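To make the first item on that list a bit more concrete, here's a minimal sketch of how requestMIDIAccess could be shimmed over an asynchronous native bridge. It's an illustration, not the app's actual code, and the names are hypothetical: postToNative stands in for something like window.webkit.messageHandlers.midi.postMessage (where "midi" is whatever handler name the Swift side registers), the reply shape is made up, and the native code would answer by calling the returned receiveFromNative function (e.g. via WKWebView's evaluateJavaScript). Ports are plain { id, name } objects here rather than real MIDIInput/MIDIOutput instances.

```javascript
// Hypothetical sketch: install navigator.requestMIDIAccess on top of a native message bridge.
// `postToNative` and the reply shape are assumptions, not a real API.
function installWebMidiShim(nav, postToNative) {
  // Don't shadow a real Web MIDI implementation if the browser has one.
  if (typeof nav.requestMIDIAccess === "function") return null;

  let nextRequestId = 0;
  const pendingRequests = new Map(); // requestId -> { resolve, reject, sysex }

  nav.requestMIDIAccess = (options = {}) =>
    new Promise((resolve, reject) => {
      const requestId = nextRequestId++;
      const sysex = Boolean(options.sysex);
      pendingRequests.set(requestId, { resolve, reject, sysex });
      // Ask the native side for access plus the current device list.
      postToNative({ type: "requestAccess", requestId, sysex });
    });

  // The native side calls this with { requestId, error } or { requestId, inputs, outputs }.
  return function receiveFromNative(reply) {
    const request = pendingRequests.get(reply.requestId);
    if (!request) return; // unknown or already answered
    pendingRequests.delete(reply.requestId);
    if (reply.error) {
      request.reject(new Error(reply.error));
      return;
    }
    // Key ports by id, the same way MIDIInputMap / MIDIOutputMap are keyed.
    const byId = (ports) => new Map(ports.map((port) => [port.id, port]));
    request.resolve({
      inputs: byId(reply.inputs),
      outputs: byId(reply.outputs),
      sysexEnabled: request.sysex,
      onstatechange: null,
    });
  };
}
```

From the page's point of view nothing changes: it still calls navigator.requestMIDIAccess({ sysex: true }) and awaits the promise, while the request id lets several in-flight requests share one bridge.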

I’m ignoring MIDIInputMap and MIDIOutputMap for now; they both look like a read-only subset of Map, so I’ll just use a plain Map. This technically isn't spec compliant, because pages get access to the mutating Map methods (set, delete, clear) that the real MIDI maps don't expose. However, I think if pages are getting funky with modifying MIDI port info, that’s horribly broken code anyway and not my problem TBH!
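If stricter compliance ever matters, a wrapper that only forwards the read-only half of Map would close that gap. This is a hypothetical sketch (createReadonlyPortMap is my own name, not part of any API): the shim keeps mutating a backing Map, while pages only see size, get, has, keys, values, entries, forEach, and iteration, which is roughly what a WebIDL readonly maplike exposes.

```javascript
// Hypothetical sketch: expose only the read-only half of a Map, like a WebIDL `readonly maplike`.
// The backing Map lives in a closure (not a #private field), so the object still works
// if it's later wrapped in a Proxy.
function createReadonlyPortMap(backing) {
  return {
    get size() {
      return backing.size;
    },
    get: (id) => backing.get(id),
    has: (id) => backing.has(id),
    keys: () => backing.keys(),
    values: () => backing.values(),
    entries: () => backing.entries(),
    forEach(callback, thisArg) {
      // Like Map.prototype.forEach, pass (value, key, map), with this wrapper as the map.
      backing.forEach((value, key) => callback.call(thisArg, value, key, this));
    },
    // `for...of` and spread iterate [id, port] pairs, same as a Map.
    [Symbol.iterator]: () => backing.entries(),
  };
}
```

The shim would call backing.set() and backing.delete() as devices come and go, and pages would simply never see those methods.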

The rest of the JavaScript pieces came together pretty quickly. The MIDIPort objects handle connections with a little state machine that takes care of things like resuming connections to devices that were disconnected during use. I chose to implement all of this connection logic on the JavaScript side because it’s the language I’m more comfortable with, and it co-locates the state management with the event code that tells pages about device changes.
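Here's a hypothetical sketch of that kind of state machine, not the app's code. It follows the two fields the Web MIDI spec defines on MIDIPort: state (connected or disconnected, i.e. whether the device is physically there) and connection (open, closed, or pending, where pending means the page opened the port but the device has gone away). The deviceDisconnected/deviceReconnected method names are my own stand-ins for whatever native notifications drive the transitions, and onChange marks where a real MIDIPort would fire a statechange event.

```javascript
// Hypothetical sketch of MIDIPort connection bookkeeping, using the Web MIDI spec's values:
//   state:      "connected" | "disconnected"  (is the device physically present?)
//   connection: "open" | "closed" | "pending" (pending = opened, but the device went away)
class PortStateMachine {
  constructor(id, onChange) {
    this.id = id;
    this.state = "connected"; // assume the port is created when its device appears
    this.connection = "closed";
    this.onChange = onChange; // stand-in for firing a "statechange" event
  }

  // Apply a new (state, connection) pair and notify only if something changed.
  transition(state, connection) {
    if (state === this.state && connection === this.connection) return;
    this.state = state;
    this.connection = connection;
    this.onChange(this);
  }

  open() {
    if (this.connection !== "closed") return; // already open or pending
    // Opening while the device is missing leaves the port pending until it returns.
    this.transition(this.state, this.state === "connected" ? "open" : "pending");
  }

  close() {
    this.transition(this.state, "closed");
  }

  // Hypothetical hooks, called when native code reports the device leaving or returning.
  deviceDisconnected() {
    this.transition("disconnected", this.connection === "open" ? "pending" : this.connection);
  }

  deviceReconnected() {
    // The "resume" case: a pending port goes back to open automatically.
    this.transition("connected", this.connection === "pending" ? "open" : this.connection);
  }
}
```

Keeping both fields in one transition method means every change produces exactly one notification, which maps neatly onto one statechange event per change.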

Honestly, the hardest part of this initial rough version was that most of the classes can emit events (state changes and MIDI messages). Both addEventListener and handler properties like onmidimessage and onstatechange need to work correctly or pages will break. I assumed extending the stock EventTarget class would work, but it had issues on some pages. I ended up implementing it myself, detecting assignments to handler properties by wrapping page-facing values in a Proxy object. This probably isn’t the most elegant solution, but the proxies were also extremely useful for debugging communication, so I went that route. I should come back and clean this up at some point!
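A stripped-down sketch of the proxy approach could look like this. It's my own illustration with hypothetical names (SimpleEventTarget, withHandlerProperties), not the app's code. The Proxy's set trap notices assignments like port.onmidimessage = fn, and the first assignment registers one forwarding listener, so later reassignments (or setting it back to null) just swap the function it calls. One gotcha worth knowing: methods called through a Proxy run with this set to the Proxy, so the listener storage is a plain property, since #private fields would throw there.

```javascript
// Hypothetical sketch: a tiny listener registry plus a Proxy that notices `onfoo = fn` assignments.
class SimpleEventTarget {
  constructor() {
    // Plain property (not a #private field) so methods still work when `this` is a Proxy.
    this.listeners = new Map(); // event type -> Set of listener functions
  }
  addEventListener(type, listener) {
    if (!this.listeners.has(type)) this.listeners.set(type, new Set());
    this.listeners.get(type).add(listener);
  }
  removeEventListener(type, listener) {
    this.listeners.get(type)?.delete(listener);
  }
  dispatchEvent(event) {
    // Iterate over a snapshot of the listeners registered for this event type.
    for (const listener of [...(this.listeners.get(event.type) ?? [])]) {
      listener.call(this, event);
    }
    return true;
  }
}

function withHandlerProperties(target, eventTypes) {
  const handlers = new Map(); // event type -> function currently assigned via on<type>
  // Returns the event type for props like "onmidimessage", or null for anything else.
  const handlerType = (prop) =>
    typeof prop === "string" && prop.startsWith("on") && eventTypes.includes(prop.slice(2))
      ? prop.slice(2)
      : null;

  return new Proxy(target, {
    get(obj, prop, receiver) {
      const type = handlerType(prop);
      return type ? handlers.get(type) ?? null : Reflect.get(obj, prop, receiver);
    },
    set(obj, prop, value, receiver) {
      const type = handlerType(prop);
      if (!type) return Reflect.set(obj, prop, value, receiver);
      if (!handlers.has(type)) {
        // First assignment: register one stable listener that calls whatever is assigned now.
        obj.addEventListener(type, function (event) {
          const handler = handlers.get(type);
          if (typeof handler === "function") handler.call(this, event);
        });
      }
      handlers.set(type, typeof value === "function" ? value : null);
      return true;
    },
  });
}
```

The same get and set traps are also a convenient place to log every property the page touches, which is where the debugging benefit comes from.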

On the Swift side, there was a lot of duct-taping together random snippets of sample code until I got a browser displaying a page, then a list of MIDI devices, then a live-updating list, then device state updates sent to the page, messages forwarded between the page and devices, etc… Again, I’m no Swift expert, but I seem to have stumbled onto a sore point of iOS development right up front: the CoreMIDI API uses C-style pointers and callbacks, which were a pain to get compiling with the right casting and conversion syntax. But other than those hurdles, Swift seems nice?

With all that, the very first signs of life were visible, and I was able to play some web-based synths and use a MIDI monitor app on my iPad:

Running Novation Components

At this point the app is definitely kinda working, but a real success would be proving that apps like Novation Components, which use complex bidirectional communication, work too. When I first tested Components, I still got hit with the "we’re sorry but iOS isn’t supported" screen, so it's likely that the Web MIDI check is just one of many platform checks they're doing...

So, I loaded Components into Chrome, put the apology text into the DevTools search, and found the checks that are locking us out:

```javascript
get browserNotSupported() {
  if (this.featureFlags.flags["enable-ios"]) {
    return this.webMidiNotSupported
  }
  return this.browserWidthNotSupported
    || this.webMidiNotSupported
    || this.screenWidthNotSupported
    || this.iOS
}
```

Oh... there's already a flag to enable iOS? Maybe Novation is testing upcoming support for other browser engines on iOS, or maybe they've experimented with something similar to this while exploring iOS distribution? Who knows, but it's neat, and it might give us a way in if we can trick Components into thinking this flag is enabled. One more Ctrl-F in the source code shows where the flags are pulled in from an API, which returns a list of active features:

```json
{"features": ["forced-login-experiment", "launchpad-master-channel", "launchpad-x-8-custom-modes", "hub-page", "show-all-products", "undo-stack", "enable-flk-faders", "enable-lk4"]}
```

Well, the actual function used to request this data is hidden behind about a minute more obfuscated JavaScript than I can be bothered to decipher, but given that the line looks like this:

```javascript
(await (0, o.default)(COMPONENTS_FEATURE_URL)).json();
```

I'm just going to guess it's a fetch call because of the json() on the end. If it looks like a duck and all that :3

What if I add some code to my injected script that swaps out the definition of fetch before Components uses it, replacing it with one that always adds the flag we need if we ask for that features URL?

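```javascript
// Wrap fetch so the Components feature-flag response always includes "enable-ios".
// COMPONENTS_FEATURE_URL stands in for the real feature-flag endpoint.
const originalFetch = window.fetch;

window.fetch = async (url, options) => {
  // Loose equality also matches URL objects, which stringify to their href.
  if (url == COMPONENTS_FEATURE_URL) {
    const response = await originalFetch(url, options);
    const data = await response.json();
    data.features.push('enable-ios');
    // Build a fresh JSON response from the modified flag list.
    return Response.json(data);
  }
  // Every other request goes through untouched.
  return originalFetch(url, options);
};
```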

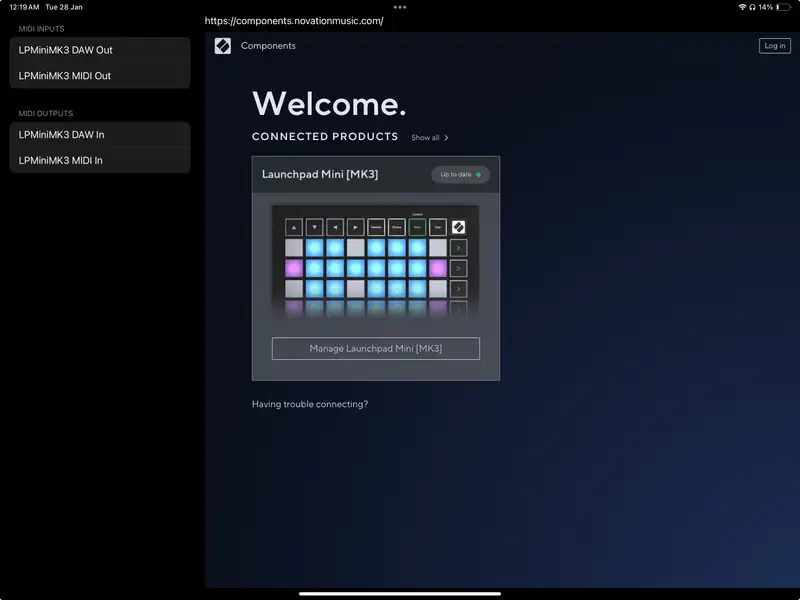

Oh wait what huh, that actually works, Components shows the home page:

I guess that means my MIDI code is passing their feature support tests already? I was expecting to have to fix more by this point!

At this stage, Components lets me see my connected Launchpad and open its editor, but while testing it out, sending layouts to the Launchpad causes a crash. See, I knew something would break…

Turns out, Web MIDI allows sending arbitrary-length SysEx (system exclusive) messages for vendor-specific data, which my Swift code was trying to cram into fixed-length packets, oops. Adding some chunking and generating the packet lists properly fixes this:

```swift
import CoreMIDI

// Given: `bytes`, `outputPort`, and `outputEndpoint`, send data to a device...
// MIDIPacket.data is a fixed 256-byte buffer, so long SysEx goes out in 256-byte chunks.
var remainingBytes = bytes
while !remainingBytes.isEmpty {
    let chunk = Array(remainingBytes.prefix(256))
    remainingBytes = Array(remainingBytes.dropFirst(256))

    var packet = MIDIPacket()
    packet.timeStamp = 0 // 0 means "send now"
    packet.length = UInt16(chunk.count)
    // Copy the chunk into the packet's fixed-size data tuple.
    withUnsafeMutableBytes(of: &packet.data) { buffer in
        buffer.copyBytes(from: chunk)
    }

    // Wrap the single packet in a packet list and send it.
    var packetList = MIDIPacketList(numPackets: 1, packet: packet)
    MIDISend(outputPort, outputEndpoint, &packetList)
}
```

Hope that snippet above saves someone some time. The CoreMIDI API documentation is certainly one of the documents of all time, and fixing a lot of these issues while trying to wrap my head around a brand new language and platform was a pain.

With one direction of communication now fixed, I’m able to send custom layouts from iOS to the Launchpad seamlessly. But when I continued testing and tried to read a custom layout from the Launchpad, I got a weird corrupted layout with only a few buttons?

Further exploration shows that while the chunking code that sends data from the page to the device is working fine, messages the device sends back don’t arrive in CoreMIDI the same way. When sending long SysEx messages to a device, I’m able to pack multiple MIDIPacket items into a single MIDIPacketList. But when receiving SysEx from the Launchpad, each “chunk” of SysEx data arrives as its own MIDIPacketList with one singular packet:

To fix this, I implemented a buffer that keeps track of all the current message chunks and only sends the whole buffer to JavaScript once it’s terminated by the end-of-SysEx byte (0xF7). I’m not super sure this always works, because I found some discussion online about CoreMIDI concatenating multiple messages into one chunk (ew), but I haven’t seen that happen and those threads are ancient, so I think it’s fine?
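That buffer lives on the Swift side, but the logic is the same in any language, so here's a hypothetical JavaScript sketch of the idea (not the app's code; createSysexAssembler and receivePacket are illustrative names). It accumulates bytes from 0xF0 until 0xF7 across packets, copes with several SysEx messages landing in one packet, and passes real-time bytes (0xF8 to 0xFF), which MIDI allows in the middle of SysEx, straight through. It does not try to split concatenated non-SysEx messages; those bytes are forwarded unchanged.

```javascript
// Hypothetical sketch: reassemble SysEx that arrives split across several packets.
// 0xF0 starts SysEx, 0xF7 ends it, 0xF8-0xFF are single-byte real-time messages.
function createSysexAssembler(onMessage) {
  let sysex = null; // bytes of an unfinished SysEx message, or null when not inside one

  // Call once per incoming packet's worth of bytes.
  return function receivePacket(bytes) {
    let other = []; // non-SysEx bytes from this packet, forwarded unchanged
    const flushOther = () => {
      if (other.length > 0) onMessage(other);
      other = [];
    };

    for (const byte of bytes) {
      if (byte >= 0xf8) {
        // Real-time bytes may interleave with SysEx; pass them straight through.
        onMessage([byte]);
      } else if (byte === 0xf0) {
        flushOther();
        sysex = [byte]; // a new start also discards any unterminated SysEx
      } else if (sysex !== null) {
        if (byte === 0xf7) {
          sysex.push(byte);
          onMessage(sysex); // complete message: hand it to the page
          sysex = null;
        } else if (byte & 0x80) {
          // Any other status byte ends SysEx without 0xF7: drop the incomplete message.
          sysex = null;
          other.push(byte);
        } else {
          sysex.push(byte); // data byte, keep buffering (possibly across packets)
        }
      } else {
        other.push(byte);
      }
    }
    flushOther();
  };
}
```

Each receivePacket call would correspond to one packet from a CoreMIDI callback, and onMessage is where a complete message would be forwarded to the page as a midimessage event.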

With these SysEx tweaks, we have full bidirectional communication working and can use Components to both save and load custom modes on the Launchpad through an iOS device! It works, we did it gang! 🎉